Here's how long my Surface Pro 4 (Core-i7 256GB SSD) takes to extract Firefox's source tarball on Windows:


The Defender team implemented and tested a fix in their signatures & scanning engine, adding tar to the same deferred-scanning heuristic Defender already applies to other archiving tools, and extraction of the test case (Firefox's source archive) dropped from ~31 mins to ~3 mins! This improvement was deployed live by the Defender team last weekend, so if your machine is up to date with its Defender signatures, etc., you should also see that it now only takes < 3 mins (depending on your hardware) to extract the archive above.

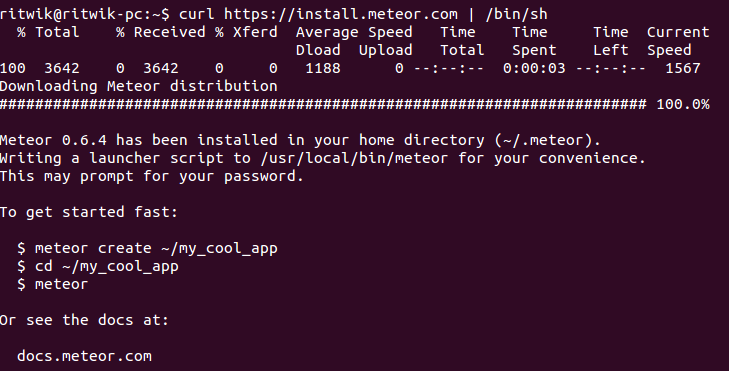

wherein it defers scanning of the extracted files until after the extraction completes. This was somewhat surprising since Defender already has special-case heuristics for archiving tools like 7Zip, WinZip, etc. It turns out that Defender was synchronously scanning each file as it was extracted, significantly impacting tar's ability to extract files quickly. Last week, I shared this issue with Defender, IO, and NTFS partner teams and we worked together to repro, measure, trace, and analyze the issue with tar taking far longer than expected to extract a large source archive on Windows. and the fix we deployed during last weekend! What we did I have just edited the title of this issue to accurately reflect the reported issue. Windows & Linux (WSL2) tests were run on a Surface Book 1/core i5/8gb/256gb SSD. even browsers like Chrome/Edge have caches with lots of files in it.apps like VSCode that need to deal with lots of files in the background.building apps/websites (webpack, C/C++ compilers.).

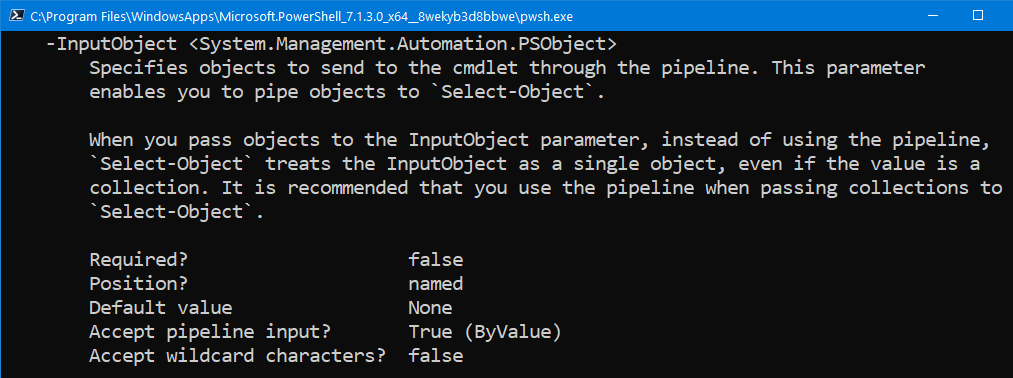

installing dev packages, like npm, ruby gems.I am sure that improving these file operations will benefit to a lot of different Windows use cases: Windows (PowerShell): Measure-Command.Mac & Linux: time tar -xf firefox-40.0.source.tar and time rm -Rf mozilla-release.
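For readers who want a single script that runs unchanged on Windows, Linux, and macOS, the extract-then-delete timing above can be approximated in Python. This is only a rough sketch: it uses Python's own `tarfile` module rather than the `tar` binary that was actually measured, so absolute numbers will differ from the ones reported here. The function name `time_extract_and_delete` and the `extract-test` directory are made up for illustration.

```python
import shutil
import sys
import tarfile
import time
from pathlib import Path


def time_extract_and_delete(archive: Path, dest: Path) -> tuple[float, float]:
    """Return (extract_seconds, delete_seconds) for one tar archive.

    Hypothetical helper: it mirrors `time tar -xf ...` followed by
    `time rm -Rf ...`, but goes through Python's tarfile module.
    """
    dest.mkdir(parents=True, exist_ok=True)

    # Time the extraction of every member into dest.
    start = time.perf_counter()
    with tarfile.open(archive) as tar:
        tar.extractall(dest)
    extract_s = time.perf_counter() - start

    # Time the recursive removal of the extracted tree.
    start = time.perf_counter()
    shutil.rmtree(dest)
    delete_s = time.perf_counter() - start

    return extract_s, delete_s


if __name__ == "__main__" and len(sys.argv) > 1:
    # Example: python bench.py firefox-40.0.source.tar
    extract_s, delete_s = time_extract_and_delete(
        Path(sys.argv[1]), Path("extract-test")
    )
    print(f"extract: {extract_s:.1f}s  delete: {delete_s:.1f}s")
```

Running it once with real-time protection on and once with the target folder excluded should give a rough feel for how much of the time is spent in scanning, with the caveat that Defender's archiver heuristics may treat a Python process differently from tar.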


C: SSD 128GB / 512GB)

Creating multiple small files is very slow: Windows Defender's real-time protection appears to make things even slower, but even when it's disabled Windows appears to lag behind Linux and macOS when handling multiple small files.

To show how slow Windows is at creating lots of small files, I downloaded the Firefox sources. Since it's a tar.bz2 file, I first uncompressed the .tar file, and all tests were done using this file as a source. It's an 852 MB file that flattens to a directory containing 119,954 files. I know it's an extreme case, but it's not rare to have to deal with thousands of small files when working with npm, git, development, etc. I added macOS results (running on a slow MacBook with a Core m3) as a comparison.

Windows 10 (protection on, folder excluded)
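To look at small-file creation on its own, separate from tar, a tiny microbenchmark can help. The sketch below is not the test used in this report: the file count, the 1 KiB payload, the 1000-files-per-directory layout, and the `create_small_files` name are all arbitrary assumptions chosen for illustration.

```python
import tempfile
import time
from pathlib import Path


def create_small_files(root: Path, count: int, size: int = 1024) -> float:
    """Create `count` files of `size` bytes under root; return elapsed seconds.

    Hypothetical helper: files are spread over subdirectories of 1000
    entries each, so no single directory becomes huge.
    """
    payload = b"x" * size
    start = time.perf_counter()
    for i in range(count):
        subdir = root / f"d{i // 1000:04d}"
        if i % 1000 == 0:
            # First file of each bucket creates its directory.
            subdir.mkdir(parents=True, exist_ok=True)
        (subdir / f"f{i:06d}.bin").write_bytes(payload)
    return time.perf_counter() - start


if __name__ == "__main__":
    n = 10_000  # arbitrary; the Firefox archive holds roughly 12x as many files
    with tempfile.TemporaryDirectory() as tmp:
        secs = create_small_files(Path(tmp), n)
    print(f"{n} files in {secs:.2f}s")
```

Comparing the result on Windows (protection on, protection off, folder excluded), WSL2, Linux, and macOS gives a rough view of per-file overhead, though a synthetic loop like this will not reproduce tar's exact I/O pattern.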
